How Siri Works: What Really Happens When You Use the ‘Hey Siri’ Activation Phrase

Always Listening, But Not Recording

When you enable “Hey Siri” on your iPhone, the microphone stays on and listens for the activation phrase. However, no audio is stored or sent to Apple until the phone detects “Hey Siri.” Instead, your iPhone continuously analyzes incoming audio with an on-device machine learning model that checks for the “Hey Siri” trigger. Once it detects a match, Siri wakes up and listens for the full voice command. A few key points: the audio being analyzed while the phone waits for the trigger is never saved and never leaves your device. It sits in a short buffer that is overwritten within a few seconds, so nothing is permanently stored. This local matching keeps private conversations from being recorded or transmitted while still allowing low-power, always-on listening.
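
The “continuously overwritten” buffer described above is essentially a fixed-size ring buffer. The sketch below is a minimal illustration under stated assumptions: audio arrives as short frames (lists of floats), the detector is any function that inspects the recent frames, and the names `RollingAudioBuffer`, `listen`, and the default capacity of 20 frames are all hypothetical, not Apple’s implementation.

```python
from collections import deque
from typing import Callable, Deque, Iterable, List, Optional

# Hypothetical frame type: one short slice of audio samples.
Frame = List[float]


class RollingAudioBuffer:
    """Holds only the most recent `capacity` frames, in memory only.

    When the buffer is full, appending a new frame silently drops the
    oldest one, so audio never accumulates and is never written to disk.
    """

    def __init__(self, capacity: int) -> None:
        self._frames: Deque[Frame] = deque(maxlen=capacity)

    def push(self, frame: Frame) -> None:
        self._frames.append(frame)  # deque(maxlen=...) evicts the oldest frame

    def snapshot(self) -> List[Frame]:
        return list(self._frames)

    def __len__(self) -> int:
        return len(self._frames)


def listen(frames: Iterable[Frame],
           is_trigger: Callable[[List[Frame]], bool],
           capacity: int = 20) -> Optional[List[Frame]]:
    """Scan a stream of frames and release buffered audio only on a match."""
    buffer = RollingAudioBuffer(capacity)
    for frame in frames:
        buffer.push(frame)
        if is_trigger(buffer.snapshot()):
            return buffer.snapshot()  # handed to the full assistant
    return None  # no trigger: the buffer is simply discarded
```

With a toy detector such as `lambda fs: fs[-1] == [1.0]`, `listen` returns only the last few frames around the match; for a stream that never matches it returns `None`, and nothing older than `capacity` frames ever existed in memory at once.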

Personalizing The Trigger Match

When you set up “Hey Siri”, you are asked to say the activation phrase a few times so your iPhone can capture your unique voice signature. This personalized model is then used alongside the general “Hey Siri” model, and a trigger must match both. Requiring this dual match helps prevent accidental activations from other voices nearby. The personalized model of your voice also never leaves your iPhone. Siri activates to listen for the full command only when you say “Hey Siri” and the audio matches both models locally; until then, no audio leaves the device. Personal data such as your voice signature is also deleted when you turn off “Hey Siri” in Settings.
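
The dual match can be pictured as two independent checks that must both pass. This is a minimal sketch, assuming each utterance has already been turned into a fixed-length voice embedding by some model not shown here, that the general phrase model outputs a single score, and that the speaker check compares embeddings by cosine similarity. The function names and threshold values are hypothetical illustrations.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def cosine_similarity(a: Vector, b: Vector) -> float:
    """Similarity of two embeddings, from -1 (opposite) to 1 (identical direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def enroll(samples: List[Vector]) -> List[float]:
    """Average the embeddings of the setup phrases into one voice profile."""
    n = len(samples)
    dims = len(samples[0])
    return [sum(s[i] for s in samples) / n for i in range(dims)]


def should_activate(phrase_score: float,
                    utterance_embedding: Vector,
                    profile: Vector,
                    phrase_threshold: float = 0.8,
                    speaker_threshold: float = 0.7) -> bool:
    """Activate only if BOTH the general phrase model and the speaker check agree."""
    phrase_ok = phrase_score >= phrase_threshold
    speaker_ok = cosine_similarity(utterance_embedding, profile) >= speaker_threshold
    return phrase_ok and speaker_ok
```

Here a confident phrase score is not enough on its own: a different voice whose embedding points away from the enrolled profile fails the second check, which is the behavior the paragraph describes.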

Improving Response Times Over Time

Apple notes that the audio analyzed for matching is “continuously overwritten,” so nothing is recorded unless “Hey Siri” is detected. An often overlooked benefit of doing this detection locally is speed: the trigger can be recognized without waiting on a network round trip. And because the personalized voice model lives on the device, it can adapt to an individual user’s voice and usage patterns over time, helping Siri activate more quickly and reliably while still preserving privacy.
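
The paragraph does not spell out how per-user adaptation would work. One plausible mechanism, assumed here rather than taken from Apple, is to blend each accepted trigger into the stored voice profile so the profile gradually tracks how the user actually sounds. The function `update_profile` and its `weight` parameter are hypothetical.

```python
from typing import List, Sequence


def update_profile(profile: Sequence[float],
                   accepted_embedding: Sequence[float],
                   weight: float = 0.1) -> List[float]:
    """Blend a newly accepted trigger into the stored voice profile.

    This is an exponential moving average: `weight` controls how quickly
    the profile drifts toward recent utterances (0.0 = never adapt,
    1.0 = replace the profile outright). The update runs entirely on
    the device; nothing here involves a server.
    """
    if not 0.0 <= weight <= 1.0:
        raise ValueError("weight must be between 0 and 1")
    return [(1.0 - weight) * p + weight * e
            for p, e in zip(profile, accepted_embedding)]
```

A small weight keeps a single unusual utterance from distorting the profile, while repeated use still moves it toward the user’s current voice.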

Hardware Components Involved

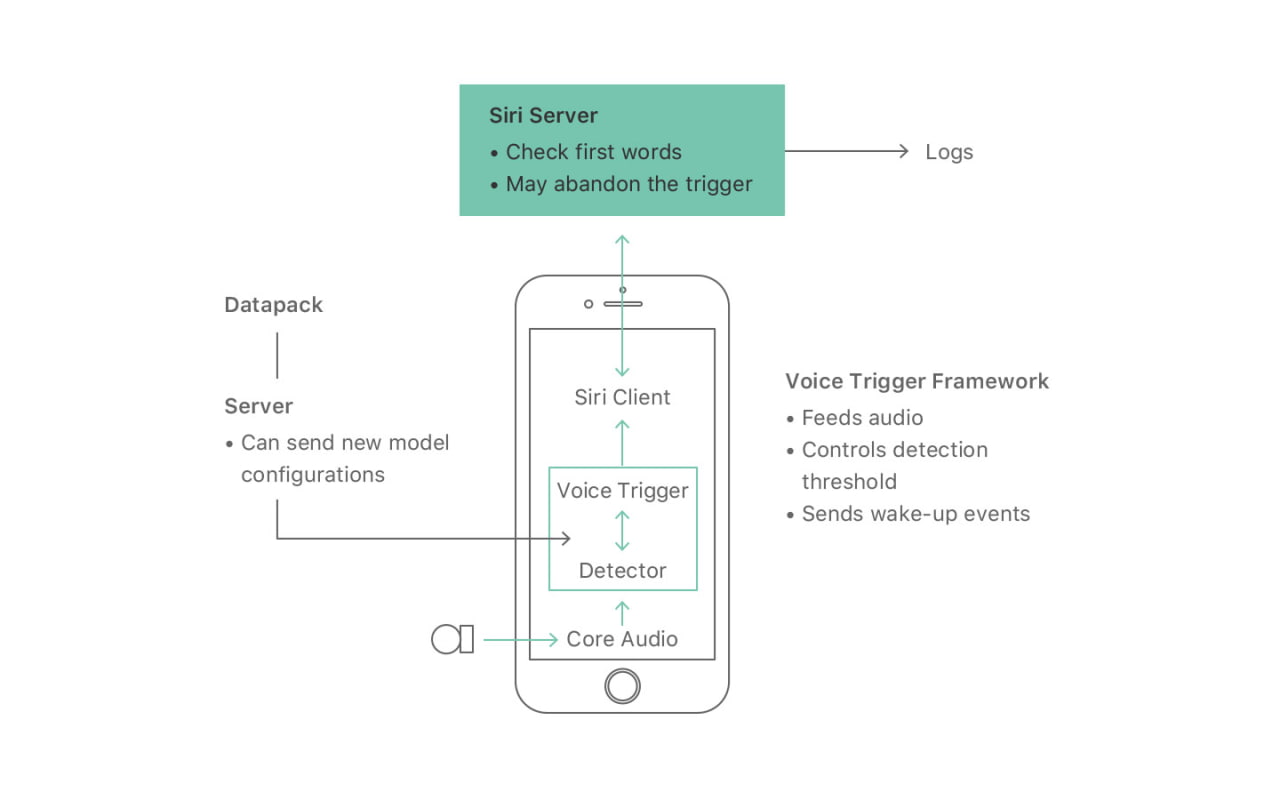

Behind the scenes, your iPhone’s main processor is not the one doing this continuous audio analysis. A separate low-power, always-on processor (Apple calls it the Always On Processor) runs a small machine learning model that listens for the phrase and performs the initial trigger match. Only when that first check fires does it wake the main processor, which runs additional verification before activating Siri. This division of work between hardware components is what lets “Hey Siri” listen continuously without draining the battery.
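
The hand-off described above is a two-stage cascade: a cheap check runs on every window of audio, and the expensive check runs only on the rare windows that pass the first one. This is a minimal sketch under stated assumptions; `small_model` and `large_model` stand in for the low-power and main-processor detectors, and all names and thresholds are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List

# Hypothetical detector type: maps a window of audio features to a score in [0, 1].
Score = Callable[[List[float]], float]


@dataclass
class CascadeStats:
    windows_seen: int = 0
    second_stage_runs: int = 0
    activations: int = 0


def run_cascade(windows: Iterable[List[float]],
                small_model: Score,
                large_model: Score,
                first_threshold: float = 0.5,
                second_threshold: float = 0.9) -> CascadeStats:
    stats = CascadeStats()
    for window in windows:
        stats.windows_seen += 1
        # Stage 1: cheap check on every window (the low-power processor's job).
        if small_model(window) < first_threshold:
            continue
        # Stage 2: expensive check, only when stage 1 fires (the main processor's job).
        stats.second_stage_runs += 1
        if large_model(window) >= second_threshold:
            stats.activations += 1
    return stats
```

The useful number is `second_stage_runs` relative to `windows_seen`: the lower that ratio, the less often the power-hungry processor has to wake up.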

In Summary

By combining on-device machine learning with low-power specialized hardware, Apple has found a clever way for iPhones to support “Hey Siri” without compromising user privacy or battery life. No audio is permanently stored or sent off the device unless the on-device pattern matching detects the trigger. Because personalization also happens locally, Siri can adapt to its user over time without exposing private conversations to Apple.

Protecting Privacy Through On-Device Processing

There was early speculation about whether “Hey Siri” would record private audio and send it off to improve Siri over time. Apple instead designed the feature so that trigger detection happens entirely on your iPhone, taking a strict stance that no audio would leave the device or be recorded without the user’s consent. To make this practical, it relies on specialized low-power hardware running compact machine learning models, so your iPhone can analyze audio continuously without draining the battery. The on-device voice model can also adapt to individual usage patterns over days and weeks. And because detection happens entirely on-device, it avoids the privacy problems of sending private conversations to cloud databases. With local ML processing, Apple gets the benefits of an always-on assistant while prioritizing user privacy.

Double Verification Reduces False Triggers

To prevent accidental triggers from nearby conversations, “Hey Siri” requires a match against both a general model and a personal model. When setting up the feature, users enroll by saying the phrase a few times, which captures their unique speech patterns and builds a personalized voice signature model. Afterwards, each candidate trigger is checked against this personal model as well as the shared “Hey Siri” model. Requiring agreement from two independent checks greatly reduces false positives from other speech. And because both checks run on the iPhone, no voice recording leaves the device during verification. This local, two-model verification helps Siri activate reliably without mistakenly capturing innocent conversations.
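
Why two checks help can be shown with a short calculation. Assume, as an idealization, that the general phrase model falsely accepts a non-trigger utterance with probability $p_g$ and the personal voice model falsely accepts it with probability $p_s$, and that the two errors are independent. A false trigger then requires both to fail at once:

```latex
P(\text{false trigger})
  = P(\text{phrase model accepts}) \cdot P(\text{voice model accepts})
  = p_g \, p_s
% Illustrative (hypothetical) rates: p_g = 0.01, p_s = 0.05
% => p_g p_s = 0.01 \times 0.05 = 0.0005, i.e. 1 in 2000 instead of 1 in 100
```

In practice the two checks look at the same audio, so their errors are likely somewhat correlated and the real reduction would be smaller than this idealized product; the rates above are illustrative, not measured figures.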

Hardware Optimizations Extend Battery Life

Making “Hey Siri” always available posed a battery-life challenge, since continuous listening and processing would drain power quickly. To address this, Apple relies on dedicated low-power hardware designed for speech detection. Only this low-power hardware listens passively for “Hey Siri”, running inference efficiently without engaging the power-hungry main processor. If it finds a match, processing shifts to the main processor to confirm it wasn’t a false trigger. Otherwise, the lightweight detection hardware keeps monitoring with little battery impact. Through this hardware partitioning, continuous listening became practical on modern mobile devices.
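
The battery argument is a duty-cycle calculation: average power is the always-on detector’s draw plus the main processor’s draw weighted by the fraction of time it is awake. The sketch below makes that arithmetic explicit; the function name and every number in it are hypothetical illustration values, not Apple measurements.

```python
def average_power_mw(detector_mw: float,
                     main_cpu_mw: float,
                     main_cpu_duty_cycle: float) -> float:
    """Average power when a low-power detector is always on and the main
    processor is awake only for a fraction of the time.

    All inputs are hypothetical illustration values, not Apple figures.
    """
    if not 0.0 <= main_cpu_duty_cycle <= 1.0:
        raise ValueError("duty cycle must be between 0 and 1")
    return detector_mw + main_cpu_duty_cycle * main_cpu_mw


# Main processor listening all the time vs. woken only 0.1% of the time.
always_on_main = average_power_mw(0.0, 300.0, 1.0)
partitioned = average_power_mw(1.0, 300.0, 0.001)
print(f"main CPU always on: {always_on_main:.1f} mW")
print(f"partitioned:        {partitioned:.1f} mW")
```

With these made-up inputs the script prints 300.0 mW for the always-on main processor and 1.3 mW for the partitioned design. The exact figures are invented, but the shape of the result holds generally: as long as the main processor rarely wakes, the low-power detector’s own draw dominates the total.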

Personal Data Is Safely Deleted

A common privacy question is what happens to sensitive data such as voice signatures after “Hey Siri” is disabled. Some services retain models trained on customer data indefinitely; Apple designed its system to avoid this. If a user turns the feature off, the personal data and customized models used for detection are discarded: the stored enrollment audio snippets and the computed voice identification model are wiped, and the iPhone stops listening for the trigger phrase altogether. Because this enrollment data never left the device during use and is removed when the feature is switched off, no residual voice databank accumulates on Apple’s servers. This gives users confidence that their speech won’t be repurposed without consent when they opt into new assistive features.
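
The delete-on-disable behavior can be modeled as a settings object whose `disable` step clears every personal artifact rather than just flipping a flag. This is a minimal sketch; the class `VoiceTriggerSettings` and its fields are hypothetical, and clearing Python objects in memory only illustrates the logic. Real secure deletion depends on how the operating system and storage layer erase data.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class VoiceTriggerSettings:
    """Hypothetical on-device store for personalized trigger data."""
    enabled: bool = False
    enrollment_clips: List[bytes] = field(default_factory=list)
    voice_profile: Optional[List[float]] = None

    def enable(self, clips: List[bytes], profile: List[float]) -> None:
        # Keep private copies so callers cannot mutate the stored data.
        self.enrollment_clips = list(clips)
        self.voice_profile = list(profile)
        self.enabled = True

    def disable(self) -> None:
        # Turning the feature off discards every personal artifact
        # instead of keeping it around "just in case".
        self.enrollment_clips.clear()
        self.voice_profile = None
        self.enabled = False

    def has_personal_data(self) -> bool:
        return bool(self.enrollment_clips) or self.voice_profile is not None
```

The design choice worth noting is that `disable` and deletion are the same operation, so there is no state in which the feature is off but the voice data lingers.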

Full Control Means Empowered Choices

With personal data staying local, users retain full control over their information and their use of Siri and “Hey Siri”. The feature can be turned on or off at any time through a simple setting. Turning it off takes a single tap and requires no further steps; since doing so deletes the voice profile, turning it back on means repeating the short enrollment. This degree of individual control over speech data, and over whether to adopt new technologies at all, builds consumer trust. People can try the feature, then disengage fully if they wish. Being in control also reassures users that enrollment was not a veiled attempt to hoard their data in centralized repositories. Apple’s approach makes “Hey Siri” an option users can enable and disable as their comfort level changes.

Conclusion

Through on-device machine learning and strict policies on data ownership, Apple has shown how privacy can coexist with AI assistants. By prioritizing local processing and giving users full control over the feature, “Hey Siri” can offer personalization without accumulating consumer voice profiles beyond what the feature needs. Individual control empowers choice and builds trust that people remain in charge of their own information and of how they engage with the technology. This balanced design shows how privacy and personalization can advance together through careful engineering.