Comparing Performance Between Supercomputers and Personal Computers

Modern supercomputers are built from the same kinds of processors and hardware found in personal computers. Their far greater performance comes from massive scale, specialized networking, and parallel software. The sections below compare these high-performance computing systems with personal computers.

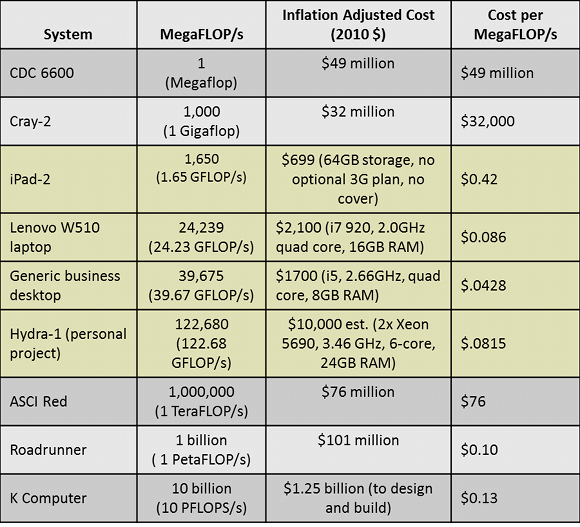

Moore’s Law Driving Exponential Performance Gains

According to David Ecale, a typical personal computer today is comparable to a leading supercomputer from around 30 years ago. Much of this progress follows Moore’s law, the observation that the number of transistors on an integrated circuit doubles approximately every two years. Over the past three decades, supercomputer performance has grown by roughly a million times. Personal computers benefit from Moore’s law too, but supercomputers achieve far greater performance by harnessing huge numbers of latest-generation processors working in parallel.
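As a rough sanity check of these figures, the short Python sketch below compares what a strict two-year doubling would deliver over 30 years with the roughly million-fold gain quoted above. It is plain arithmetic under two stated assumptions, an exact two-year doubling period and a 30-year span. It is not a model of real hardware trends.

```python
import math

def growth_factor(years: float, doubling_period: float = 2.0) -> float:
    """Factor by which a quantity grows if it doubles every `doubling_period` years."""
    return 2.0 ** (years / doubling_period)

def implied_doubling_period(total_gain: float, years: float) -> float:
    """Doubling period (in years) needed to reach `total_gain` over `years`."""
    return years / math.log2(total_gain)

# Transistor-count growth predicted by a strict two-year doubling over 30 years.
transistors = growth_factor(30)  # 2**15
# Doubling period implied by a roughly million-fold performance gain over 30 years.
period = implied_doubling_period(1e6, 30)

print(f"Moore's law over 30 years: ~{transistors:,.0f}x")
print(f"Million-fold gain implies doubling every ~{period:.2f} years")
```

Doubling every two years for 30 years gives 2^15, or about 33,000×. Reaching roughly 1,000,000× would instead require doubling about every 1.5 years. The leftover factor of about 30 fits the point above: much of the advantage comes from running ever more processors in parallel, not from transistor density alone.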

Utilizing Commodity Hardware at Scale

Modern supercomputers are built from the same central processing units (CPUs) and graphics processing units (GPUs) that power gaming PCs and workstations. The difference is quantity: it is not uncommon for high-end systems to house over 100,000 CPU cores and petabytes of memory. For example, one decommissioned machine contained nearly 7,000 nodes, each with two multi-core Sandy Bridge processors. By aggregating enormous quantities of commodity hardware and connecting it with very high-speed networks, supercomputers can tackle far larger and more detailed problems than a personal computer can.
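The effect of scale can be made concrete with the usual theoretical-peak formula. Peak performance equals nodes × sockets per node × cores per socket × clock rate × floating-point operations (FLOPs) per cycle. The sketch below applies this formula to a hypothetical cluster loosely modeled on the ~7,000-node Sandy Bridge machine above, and to a hypothetical desktop. The core counts, clock rates, and FLOPs-per-cycle values are assumptions chosen for illustration, not specifications of any real system. Theoretical peak is also an upper bound; real applications sustain only a fraction of it.

```python
def peak_flops(nodes: int, sockets_per_node: int, cores_per_socket: int,
               clock_hz: float, flops_per_cycle: int) -> float:
    """Theoretical peak: every core retires its maximum FLOPs on every cycle."""
    return nodes * sockets_per_node * cores_per_socket * clock_hz * flops_per_cycle

# Hypothetical cluster (assumed figures): 7,000 nodes, two 8-core Sandy Bridge
# CPUs per node at 2.6 GHz, 8 double-precision FLOPs per cycle per core with AVX.
cluster_cores = 7_000 * 2 * 8
cluster = peak_flops(7_000, 2, 8, 2.6e9, 8)

# Hypothetical desktop (assumed figures): one 8-core CPU at 3.5 GHz,
# 16 double-precision FLOPs per cycle per core (AVX2 with two FMA units).
desktop = peak_flops(1, 1, 8, 3.5e9, 16)

print(f"cluster cores: {cluster_cores:,}")
print(f"cluster peak:  {cluster / 1e15:.2f} PFLOP/s")
print(f"desktop peak:  {desktop / 1e12:.3f} TFLOP/s")
print(f"ratio:         ~{cluster / desktop:,.0f}x")
```

Under these assumptions the cluster has 112,000 cores and about 2.3 PFLOP/s of peak performance. That is roughly 5,000 times the hypothetical desktop, even though each desktop core is individually faster.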

Specialized High-Performance Networking

While the raw computing hardware may be similar, one of the major differences that lets supercomputers outperform personal machines is their high-bandwidth, low-latency interconnect. State-of-the-art networks allow any of millions of processor cores to communicate with any other in about one microsecond, with around 100 gigabits per second of bidirectional bandwidth. This lets the cores collaborate closely on parallel problems. A personal computer has no comparable fabric. Its components communicate over the internal PCIe bus, and connecting several PCs together typically means commodity Ethernet, which offers far less bandwidth and much higher latency than a supercomputer interconnect. Emerging interconnect technologies such as silicon photonics may eventually bring supercomputer-class network performance to a wider range of systems.
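A common first-order way to reason about interconnect figures like these is the latency-bandwidth model, also called the alpha-beta or Hockney model. In this model, sending a message costs a fixed start-up latency plus message size divided by bandwidth. The sketch below uses the text's figures of about 1 µs and 100 Gb/s, treating 100 Gb/s as the one-way rate, which is an assumption. It compares them with an assumed Gigabit Ethernet link with about 50 µs latency. The Ethernet numbers are illustrative, not measurements.

```python
def transfer_time(message_bytes: float, latency_s: float, bandwidth_bps: float) -> float:
    """Latency-bandwidth model: fixed start-up cost plus size divided by bandwidth."""
    return latency_s + (message_bytes * 8) / bandwidth_bps

MB = 1_000_000  # bytes

# HPC interconnect figures from the text: ~1 microsecond latency, ~100 Gb/s.
hpc = transfer_time(1 * MB, 1e-6, 100e9)
# Assumed commodity Gigabit Ethernet link: ~50 microseconds latency, 1 Gb/s.
ethernet = transfer_time(1 * MB, 50e-6, 1e9)

print(f"1 MB over HPC interconnect: {hpc * 1e6:.0f} us")
print(f"1 MB over Gigabit Ethernet: {ethernet * 1e3:.2f} ms")
```

For a 1 MB message, the model gives about 81 µs on the interconnect versus about 8 ms over Gigabit Ethernet. For very small messages the latency term dominates instead. This is why tightly coupled parallel codes, which exchange many small messages, are so sensitive to interconnect latency.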

Advanced Parallel Software Methods

In addition to their immense parallelism, supercomputers rely on sophisticated software tools that let millions of cores cooperate on grand-challenge problems no single machine could handle alone. Standard parallel programming approaches such as the Message Passing Interface (MPI) and the dense linear algebra routines of ScaLAPACK (Scalable Linear Algebra PACKage) give applications common ways to divide work and communicate results. Personal computers also benefit from multi-threading, but supercomputers employ methods that exploit parallelism across far greater numbers of cores and nodes. Subject matter experts can now write programs that make effective use of thousands of cores, a task that would have seemed nearly impossible just a decade ago.
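Many MPI programs follow a simple pattern. Each process, or rank, is assigned a contiguous block of the data, computes a partial result, and a collective operation combines the partial results. The sketch below shows only that decomposition-and-reduce pattern in plain, sequential Python. It does not use MPI. The function names are hypothetical, and the final `sum` stands in for a collective such as `MPI_Reduce` with `MPI_SUM`. In a real MPI program each rank would be a separate process, typically on a different node.

```python
from typing import List

def block_range(n: int, size: int, rank: int) -> range:
    """Indices owned by `rank` when n items are split across `size` ranks.

    The first n % size ranks receive one extra item, so loads differ by at most one.
    """
    base, extra = divmod(n, size)
    start = rank * base + min(rank, extra)
    stop = start + base + (1 if rank < extra else 0)
    return range(start, stop)

def local_work(indices: range) -> int:
    """One rank's share of the computation: here, a partial sum of squares."""
    return sum(i * i for i in indices)

def simulate(n: int, size: int) -> int:
    # Sequential stand-in: each "rank" computes only its own partial result.
    partials: List[int] = [local_work(block_range(n, size, r)) for r in range(size)]
    # Stand-in for a collective reduction (for example MPI_Reduce with MPI_SUM).
    return sum(partials)

n, size = 1_000_000, 7
total = simulate(n, size)
print(total == sum(i * i for i in range(n)))  # True
```

The balanced block split is one common design choice because no rank waits on a neighbor holding more than one extra item. Real applications may instead use cyclic or block-cyclic layouts, as ScaLAPACK does for dense matrices.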

Combining Technology to Solve Complex Problems

To summarize, although they use similar fundamental computing components, supercomputers diverge from personal machines in three ways: their ability to harness huge numbers of processors, fast interconnects that allow system-wide communication, and sophisticated parallel algorithms. Simply aggregating compute resources is not enough. It takes dedicated facilities, cooling capable of dissipating megawatts of heat, electrical systems to power hundreds of thousands of components, and a corps of software developers willing to optimize applications. When all these factors converge, major progress becomes possible on hard problems in fields like climate modeling, molecular dynamics, and astrophysics. These problems were previously unsolvable or would have taken dramatically longer on a single computer or a smaller cluster.

High Performance Computing Continues to Progress

In the future, continued Moore’s law scaling, together with advances such as silicon photonics, 3D chip stacking, and possibly quantum computing, could further enhance supercomputer networking and performance. Programming models may mature to better abstract parallelism, while new algorithms apply existing techniques in novel ways. Personal computers will also benefit from integrated components offering more cores, higher-bandwidth memory subsystems, and greater on-chip parallelism through die stacking. However, only by aggregating and coordinating extremely large numbers of nodes can supercomputers tackle open questions at the forefront of science and engineering. Advanced computing will continue pushing boundaries to solve humanity’s grand challenges.

Massively Parallel Supercomputers Enable Scientific Discovery

Running a trivial job such as the Unix date command across 100,000 nodes might make an entertaining demonstration, but it would not meaningfully exercise a modern supercomputer or justify its immense cost. The true value lies in tackling problems that previously seemed intractable or would have taken infeasibly long on conventional resources. Examples include dynamically modeling planet-scale environmental phenomena, simulating molecular and biological interactions at extreme precision, designing high-energy-density materials, and discovering new fundamental particles by analyzing high-energy collider data. Looking ahead, exascale supercomputers may help humanity address challenges such as developing sustainable fusion energy reactors, understanding the human brain, and predicting the impacts of climate change. With careful collaboration between domain scientists, applied mathematicians, and computer engineers, massively parallel supercomputing will continue unlocking insights into society’s most pressing challenges.